Quantification

Quantification is the process of converting absolute durations (for instance, in milliseconds) into a structured rhythmic sequence of measures, with meter and pulse subdivisions.

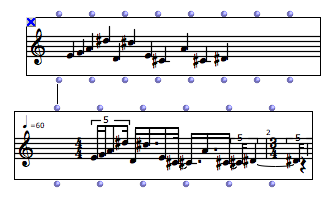

In other words, and in terms of OpenMusic objects, this is basically what happens when converting a chord-seq or a MIDI file object into a voice.

Quantification is not an easy task: it requires preliminary information and suitable approximations in order to be performed correctly. When importing a MIDI file into a voice, for instance, the tempo and meter information can be used to better deduce the rhythm corresponding to the raw sequence of onsets encoded in the MIDI file.
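To illustrate why tempo information helps, here is a minimal Python sketch of a naive grid quantization. It is not OpenMusic's algorithm: the function name `quantize_onsets` and its parameters `tempo_bpm` and `subdivision` are hypothetical, and the sketch simply snaps each onset to the nearest fraction of a beat.

```python
from fractions import Fraction

def quantize_onsets(onsets_ms, tempo_bpm=60, subdivision=4):
    """Snap onsets (in ms) to the nearest 1/subdivision of a beat.

    Hypothetical helper for illustration only; returns positions in beats.
    """
    beat_ms = 60000 / tempo_bpm  # duration of one beat in milliseconds
    return [
        Fraction(round(t / beat_ms * subdivision), subdivision)
        for t in onsets_ms
    ]

# Slightly irregular onsets at 60 BPM, snapped to sixteenth notes
positions = quantize_onsets([0, 490, 1020, 1260], tempo_bpm=60, subdivision=4)
```

With the same raw onsets, a different tempo or subdivision yields a different rhythmic reading, which is why these parameters have to be known or chosen beforehand.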

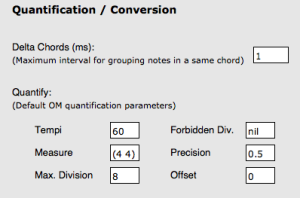

In order to guide the quantification process, some general parameters can be set in the OM Preferences.

Even so, some notes in the original sequence may be lost during quantification. In this case, the following message appears:

Warning: with the given constraints, n notes are lost while quantizing

When this happens, the quantification parameters should probably be adjusted so that the rhythmic constraints better match the initial durations of the sequence.
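One plausible way for notes to be lost is that two onsets closer together than the grid resolution collapse onto the same position. The following Python sketch illustrates that idea only; it is an assumption about the mechanism, not OpenMusic's actual criterion, and `count_lost_notes` and `grid_ms` are hypothetical names.

```python
def count_lost_notes(onsets_ms, grid_ms):
    """Count onsets that fall on a grid slot already taken by an earlier onset.

    Hypothetical illustration: a note is considered lost when another note
    already occupies the grid position it rounds to.
    """
    seen = set()
    lost = 0
    for t in onsets_ms:
        slot = round(t / grid_ms)  # index of the nearest grid position
        if slot in seen:
            lost += 1
        else:
            seen.add(slot)
    return lost

onsets = [0, 100, 200, 500]
coarse = count_lost_notes(onsets, grid_ms=250)  # grid too coarse for this sequence
fine = count_lost_notes(onsets, grid_ms=100)    # grid matching the shortest interval
```

In this sketch, refining the grid so that it matches the shortest interval in the sequence removes the collision, which mirrors the advice to adjust the parameters to the initial durations.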

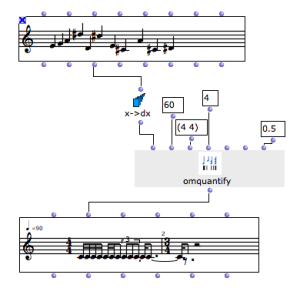

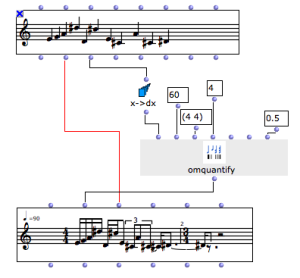

When a specific or precise quantification needs to be performed, you will generally need to use the OMQUANTIFY box to convert a list of durations (typically coming from chord-seqs or similar data) into a rhythmic tree suitable for initializing a voice object.

The X->DX function can be used to compute a list of durations starting from the list of onsets of a chord-seq object.
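The onset-to-duration conversion amounts to taking the differences between successive onsets. A minimal Python sketch of that computation follows; the name `x_to_dx` is a hypothetical stand-in used for illustration, not the OpenMusic function itself.

```python
def x_to_dx(onsets):
    """Return the intervals between successive values of a list of onsets."""
    return [b - a for a, b in zip(onsets, onsets[1:])]

# Onsets in milliseconds, as they might be read from a chord-seq
durations = x_to_dx([0, 500, 750, 1500])  # [500, 250, 750]
```

Note that a list of n onsets gives n-1 intervals, so the duration of the last note has to come from elsewhere.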